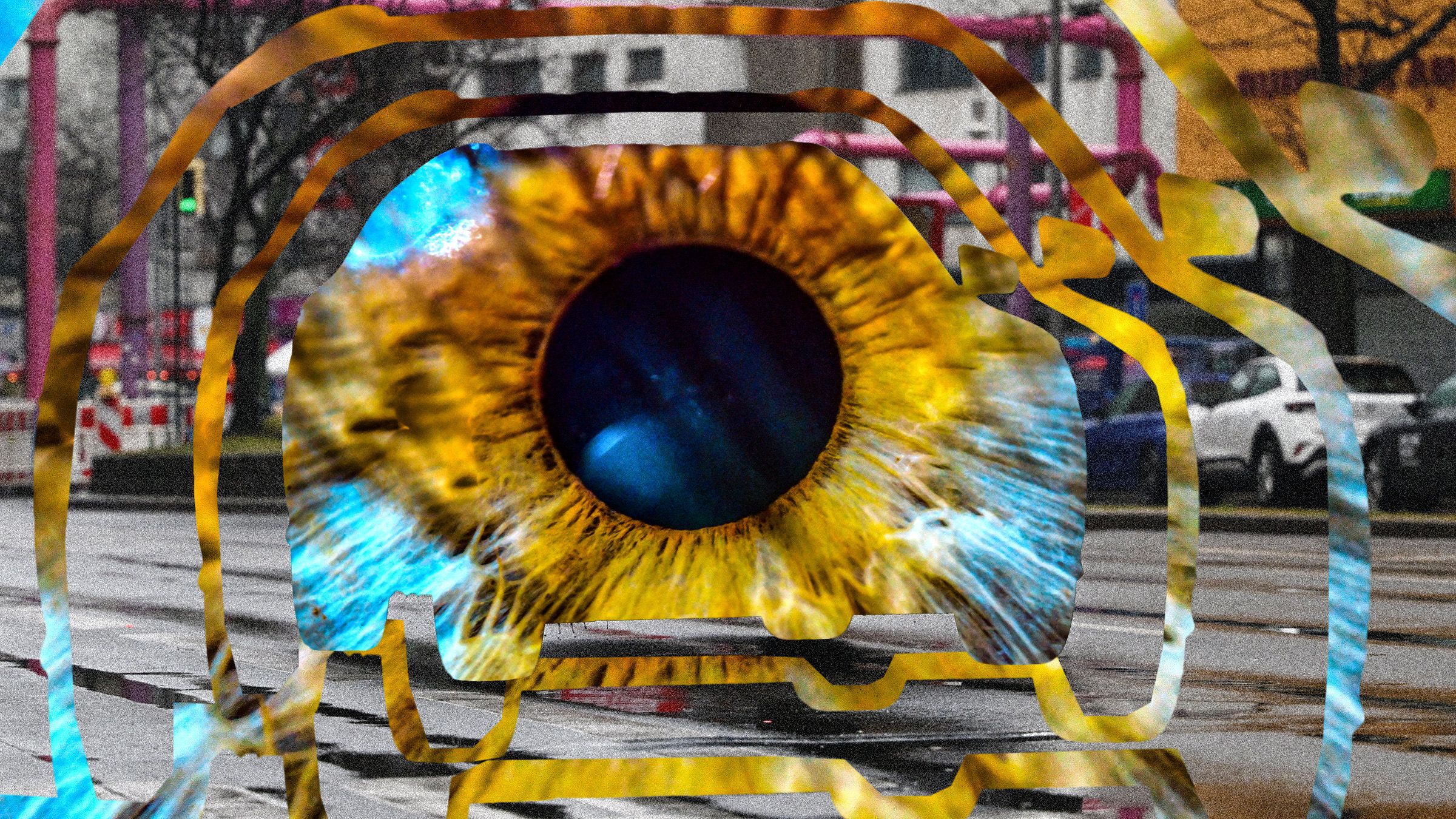

Google Lifts a Ban on Using Its AI for Weapons and Surveillance

Google recently announced that it will allow its artificial intelligence technology to be used for military weapons and surveillance.

The company had previously established a policy that prohibited the use of its AI for such applications, citing ethical concerns.

However, Google has faced criticism from shareholders and employees for its involvement in military contracts, prompting the company to reconsider its stance.

The decision to lift the ban has sparked a debate within the tech industry about the responsibility of companies to consider the potential consequences of their technologies.

Some argue that AI can be used for defensive purposes and to minimize collateral damage in warfare, while others warn that it could lead to the development of autonomous weapons systems.

Google has stated that it will still review each request to use its AI technology for weapons or surveillance on a case-by-case basis to ensure that the proposed use aligns with the company's values.

The company also plans to establish an external advisory council to provide oversight and guidance on the ethical implications of its AI projects.

Despite these measures, critics remain skeptical that Google will avoid contributing to the militarization of AI.

As the debate continues, it remains to be seen how other tech companies will handle military and surveillance uses of their AI.

Ultimately, Google's decision to lift its ban on using AI for weapons and surveillance raises important questions about the ethical use of advanced technologies in society.